
The Java Media Framework (JMF) is a recent Java API for real-time multimedia presentation and effects processing. JMF handles time-based media, that is, media that changes with respect to time. Examples include video from a television source, audio from a raw-audio format file, and animations. This report uses the beta JMF 2.0 specification, as it currently reflects the features that will appear in the final version.


The JMF architecture is organized into three stages: input, processing and output.

During the input stage, data is read from a source and passed in buffers to the processing stage. The input stage may consist of reading data from a local capture device (such as a webcam or TV capture card), a file on disk or a stream from the network.

The processing stage consists of a number of codecs and effects designed to modify the data stream to one suitable for output. These codecs may perform functions such as compressing or decompressing the audio to a different format, adding a watermark of some kind, cleaning up noise or applying an effect to the stream (such as echo to the audio).

Once the processing stage has applied its transformations to the stream, it passes the information to the output stage. The output stage may take the stream and pass it to a file on disk, output it to the local video display or transmit it over the network.

For example, a JMF system may, in the input stage, read from a local TV capture card that is connected to a VCR. It may then pass the data to the processing stage to add a watermark in the corner of each frame, and finally broadcast the result over the local intranet in the output stage.
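The three stages can be pictured as a loop that pulls buffers from a source, runs them through a chain of transformations and hands the result to a sink. The following is a minimal plain-Java sketch of that idea, not JMF code: `Frame`, `watermark` and `run` are hypothetical names invented for illustration, and a real JMF pipeline would use the framework's own components instead.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.UnaryOperator;

// Plain-Java sketch of the three stages; none of these names come from JMF.
final class PipelineSketch {

    /** Hypothetical stand-in for one video frame: a row-major grid of pixel values. */
    static final class Frame {
        final int width, height;
        final int[] pixels;

        Frame(int width, int height) {
            this.width = width;
            this.height = height;
            this.pixels = new int[width * height];
        }
    }

    /** Processing step: copies the frame and marks its bottom-right pixel. */
    static Frame watermark(Frame in) {
        Frame out = new Frame(in.width, in.height);
        System.arraycopy(in.pixels, 0, out.pixels, 0, in.pixels.length);
        out.pixels[out.pixels.length - 1] = 0xFFFFFF;
        return out;
    }

    /** Runs every input frame through each processing step in order. */
    static List<Frame> run(List<Frame> input, List<UnaryOperator<Frame>> steps) {
        List<Frame> output = new ArrayList<>();
        for (Frame frame : input) {                    // input stage: read from the source
            for (UnaryOperator<Frame> step : steps) {
                frame = step.apply(frame);             // processing stage: transform the data
            }
            output.add(frame);                         // output stage: hand off to a sink
        }
        return output;
    }

    public static void main(String[] args) {
        List<Frame> captured = new ArrayList<>();
        captured.add(new Frame(4, 3));
        captured.add(new Frame(4, 3));

        List<UnaryOperator<Frame>> steps = new ArrayList<>();
        steps.add(PipelineSketch::watermark);

        List<Frame> sent = run(captured, steps);
        System.out.println(sent.size() + " frames, corner pixel = "
                + Integer.toHexString(sent.get(0).pixels[11]));
    }
}
```

Because each step takes a frame and returns a frame, further steps can be appended to the list without changing the input or output stages, which is the property the component architecture described next relies on.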

JMF is built around a component architecture. The components are organized into a number of main categories:

MediaHandlers are registered for each type of file that JMF must be able to handle. To support new file formats, a new MediaHandler can be created.

A DataSource handler manages source streams from various inputs. These can be for network protocols, such as http or ftp, or for simple input from disk.

Codecs and Effects are components that take an input stream, apply a transformation to it and output it. Codecs may have different input and output formats, while Effects are simple transformations of a single input format to an output stream of the same format.

A Renderer is similar to a Codec, but its final output goes somewhere other than another stream. A VideoRenderer outputs the final data to the screen, but another kind of Renderer could output to different hardware, such as a TV-out card.

Multiplexers and Demultiplexers are used to combine multiple streams into a single stream or vice-versa, respectively. They are useful for creating and reading a package of audio and video for saving to disk as a single file, or transmitting over a network.
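To make the multiplexing idea concrete, here is a minimal plain-Java sketch, assuming a very simple scheme in which every chunk of data is tagged with a track number. `Packet`, `mux` and `demux` are hypothetical names chosen for illustration; JMF's own Multiplexer and Demultiplexer components work on real container formats and are not shown here.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java sketch; Packet, mux and demux are hypothetical names, not JMF classes.
final class MuxSketch {

    /** One chunk of data tagged with the index of the track it belongs to. */
    static final class Packet {
        final int track;
        final String payload;

        Packet(int track, String payload) {
            this.track = track;
            this.payload = payload;
        }
    }

    /** Multiplex: interleave the tracks round-robin into a single tagged stream. */
    static List<Packet> mux(List<List<String>> tracks) {
        int longest = 0;
        for (List<String> t : tracks) {
            longest = Math.max(longest, t.size());
        }
        List<Packet> combined = new ArrayList<>();
        for (int i = 0; i < longest; i++) {
            for (int track = 0; track < tracks.size(); track++) {
                List<String> t = tracks.get(track);
                if (i < t.size()) {
                    combined.add(new Packet(track, t.get(i)));
                }
            }
        }
        return combined;
    }

    /** Demultiplex: route each packet back to its own track using the tag. */
    static List<List<String>> demux(List<Packet> combined, int trackCount) {
        List<List<String>> tracks = new ArrayList<>();
        for (int i = 0; i < trackCount; i++) {
            tracks.add(new ArrayList<>());
        }
        for (Packet p : combined) {
            tracks.get(p.track).add(p.payload);
        }
        return tracks;
    }

    public static void main(String[] args) {
        List<List<String>> tracks = new ArrayList<>();
        tracks.add(Arrays.asList("a0", "a1", "a2")); // track 0: audio chunks
        tracks.add(Arrays.asList("v0", "v1"));       // track 1: video chunks

        List<Packet> combined = mux(tracks);
        System.out.println("round trip intact: " + demux(combined, 2).equals(tracks));
    }
}
```

Interleaving rather than concatenating the tracks keeps audio and video chunks that belong together close to each other in the combined stream, which matters when the stream is played while it is still being read.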

The Java Media Framework provides a number of pre-built classes that handle the reading, processing and display of data. Using the Player, media can easily be incorporated into any graphical application (AWT or Swing). The Processor allows you to control the encoding or decoding process at a finer level than the Player, such as adding a custom codec or effect between the input and output stages.

The Player class is an easy way to embed multimedia in an application. It handles the setup of the file handler, video and audio decoders, and media renderers automatically. It is possible to embed the Player in a Swing application, but care must be taken because it is a heavyweight component (it won't clip if another component is placed in front of it).

    import java.applet.*;
    import java.awt.*;
    import java.net.*;
    import javax.media.*;

    public class PlayerApplet extends Applet {
        Player player = null;

        public void init() {
            setLayout( new BorderLayout() );
            String mediaFile = getParameter( "FILE" );
            try {
                URL mediaURL = new URL( getDocumentBase(), mediaFile );
                // Blocks until the player is realized
                player = Manager.createRealizedPlayer( mediaURL );
                if (player.getVisualComponent() != null)
                    add("Center", player.getVisualComponent());
                if (player.getControlPanelComponent() != null)
                    add("South", player.getControlPanelComponent());
            }
            catch (Exception e) {
                System.err.println( "Got exception " + e );
            }
        }

        // player stays null if creation failed in init(), so guard each call

        public void start() {
            if (player != null)
                player.start();
        }

        public void stop() {
            if (player != null) {
                player.stop();
                player.deallocate();
            }
        }

        public void destroy() {
            if (player != null)
                player.close();
        }
    }

In this case, we use the static createRealizedPlayer() method of the Manager class to create the Player object. The call blocks until the player is realized, which ensures that the visual and control panel components exist before they are added to the window. It is also possible to create an unrealized player and implement the ControllerListener interface. The applet registers itself with addControllerListener(), calls realize(), and then adds the components to the layout once controllerUpdate() receives a RealizeCompleteEvent:

    public synchronized void controllerUpdate( ControllerEvent event ) {
        if ( event instanceof RealizeCompleteEvent ) {
            Component comp;
            if ( (comp = player.getVisualComponent()) != null )
                add ( "Center", comp );
            if ( (comp = player.getControlPanelComponent()) != null )
                add ( "South", comp );
            validate();
        }
    }
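The difference between the blocking and the event-driven approach can be modelled without JMF. The sketch below is a plain-Java illustration only: `FakePlayer`, `Listener`, `State` and `createRealized` are hypothetical names, and the "realize" work is an empty placeholder. It shows a `realize()` call that returns immediately and notifies listeners later, plus a blocking helper built on top of that event.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

// Plain-Java model of the pattern; FakePlayer and its members are hypothetical, not JMF API.
final class RealizeSketch {

    enum State { UNREALIZED, REALIZING, REALIZED }

    interface Listener {
        void stateChanged(State newState);
    }

    static final class FakePlayer {
        private final List<Listener> listeners = new CopyOnWriteArrayList<>();
        private volatile State state = State.UNREALIZED;

        void addListener(Listener l) { listeners.add(l); }

        State getState() { return state; }

        /** Returns immediately; the setup work runs on a worker thread. */
        void realize() {
            state = State.REALIZING;
            Thread worker = new Thread(() -> {
                // A real player would open the media and build its components here.
                state = State.REALIZED;
                for (Listener l : listeners) {
                    l.stateChanged(state);
                }
            });
            worker.start();
        }
    }

    /** Blocking convenience: start realizing, then wait for the completion event. */
    static FakePlayer createRealized(long timeoutMillis) {
        FakePlayer player = new FakePlayer();
        CountDownLatch realized = new CountDownLatch(1);
        player.addListener(s -> {
            if (s == State.REALIZED) {
                realized.countDown();
            }
        });
        player.realize();
        try {
            if (!realized.await(timeoutMillis, TimeUnit.MILLISECONDS)) {
                throw new IllegalStateException("player did not realize in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return player;
    }

    public static void main(String[] args) {
        FakePlayer player = createRealized(1000);
        System.out.println(player.getState());
    }
}
```

The event-driven form keeps the user interface responsive while the media is being opened, at the cost of handling the callback; the blocking form is simpler when a short pause during startup is acceptable.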

Using a simple applet tag, a multimedia stream can easily be embedded in a webpage:

    <APPLET CODE=PlayerApplet WIDTH=320 HEIGHT=300>
    <PARAM NAME=FILE VALUE="sparkle2.mpeg">
    </APPLET>

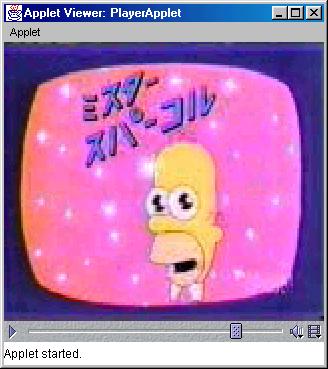

This will create an applet with an embedded MPEG video stream:

This can also be used to embed multimedia content in an HTML file, as shown below. Previously, browser-specific plugins were required.

The Player can be easily used in a Swing application as well. The following code creates a Swing-based TV capture program with the video output displayed in the entire window:

    import javax.media.*;
    import javax.swing.*;
    import java.awt.*;
    import java.awt.event.*;

    public class JMFTest extends JFrame {
        Player _player;

        JMFTest() {
            addWindowListener( new WindowAdapter() {
                public void windowClosing( WindowEvent e ) {
                    // _player stays null if creation failed in the constructor
                    if (_player != null) {
                        _player.stop();
                        _player.deallocate();
                        _player.close();
                    }
                    System.exit( 0 );
                }
            });
            setBounds( 0, 0, 320, 260 ); // window position and size
            JPanel panel = (JPanel)getContentPane();
            panel.setLayout( new BorderLayout() );
            String mediaFile = "vfw://1"; // Video for Windows capture device
            try {
                MediaLocator mlr = new MediaLocator( mediaFile );
                _player = Manager.createRealizedPlayer( mlr );
                if (_player.getVisualComponent() != null)
                    panel.add("Center", _player.getVisualComponent());
                if (_player.getControlPanelComponent() != null)
                    panel.add("South", _player.getControlPanelComponent());
            }
            catch (Exception e) {
                System.err.println( "Got exception " + e );
            }
        }

        public static void main(String[] args) {
            JMFTest jmfTest = new JMFTest();
            jmfTest.setVisible( true );
        }
    }

To capture audio, the sampling frequency, sample size and number of channels must be specified. JMF then looks for capture devices that support this format and returns a list of all that match.

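    CaptureDeviceInfo di = null;
    Format format = new AudioFormat( AudioFormat.LINEAR, 44100, 16, 2 );
    Vector deviceList = CaptureDeviceManager.getDeviceList( format );
    if ( deviceList.size() > 0 )
        di = (CaptureDeviceInfo)deviceList.firstElement();
    else
        System.exit( -1 ); // no capture device supports this format

    // createRealizedProcessor() takes a ProcessorModel in the released 2.0 API;
    // the WAVE content descriptor requests output suitable for a .wav file
    Processor p = Manager.createRealizedProcessor(
        new ProcessorModel( di.getLocator(), new Format[] { format },
                            new FileTypeDescriptor( FileTypeDescriptor.WAVE ) ) );
    DataSource source = p.getDataOutput();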

The DataSource returned from the Processor can then be handed to Manager.createPlayer() to play it back. To capture it to an audio file instead, pass it to a DataSink:

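    DataSink sink;
    MediaLocator dest = new MediaLocator( "file://output.wav" );
    try {
        sink = Manager.createDataSink( source, dest );
        sink.open();
        sink.start();
        p.start(); // no data flows until the processor is started
    } catch (Exception e) {
        System.err.println( "Got exception " + e );
    }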

Together, the two listings above take input from the first matching audio device (usually a microphone) and stream it to a WAV file on the local filesystem.
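It helps to know how quickly such a file grows. The following small Java sketch works through the arithmetic for the capture format used above (44100 Hz, 16-bit samples, 2 channels). `PcmSizeSketch` and its methods are hypothetical helper names, and the figures cover only the raw audio data, not the file header.

```java
// Worked arithmetic for uncompressed (linear) audio; the class name is hypothetical.
final class PcmSizeSketch {

    /** Bytes in one frame, where a frame holds one sample for every channel. */
    static int frameSize(int sampleSizeInBits, int channels) {
        return (sampleSizeInBits / 8) * channels;
    }

    /** Bytes of raw audio data produced per second of capture. */
    static long bytesPerSecond(int sampleRate, int sampleSizeInBits, int channels) {
        return (long) sampleRate * frameSize(sampleSizeInBits, channels);
    }

    public static void main(String[] args) {
        long rate = bytesPerSecond(44100, 16, 2);
        System.out.println("bytes per second: " + rate);      // 44100 * 4 = 176400
        System.out.println("bytes per minute: " + rate * 60); // 176400 * 60 = 10584000
    }
}
```

At roughly 10.6 MB per minute, uncompressed stereo capture at this quality fills a disk quickly, which is one reason the processing stage often includes a compressing codec.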

Capturing video works the same way as capturing audio. Most video sources have an accompanying audio track that must be encoded as well, so the destination file must hold both tracks.

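    Format formats[] = new Format[2];
    formats[0] = new AudioFormat( AudioFormat.LINEAR, 44100, 16, 2 );
    formats[1] = new VideoFormat( VideoFormat.CINEPAK ); // Cinepak video compressor

    // Request QuickTime output so that both tracks end up in one stream
    FileTypeDescriptor outputType =
        new FileTypeDescriptor( FileTypeDescriptor.QUICKTIME );

    Processor p = null;
    try {
        p = Manager.createRealizedProcessor( new ProcessorModel( formats, outputType ) );
    } catch ( Exception e ) {
        System.err.println( "Got exception " + e );
        System.exit( -1 );
    }

    DataSource source = p.getDataOutput();
    MediaLocator dest = new MediaLocator( "file://output.mov" );
    DataSink filewriter = null;
    try {
        filewriter = Manager.createDataSink( source, dest );
        filewriter.open();
        filewriter.start();
    } catch ( Exception e ) {
        System.err.println( "Got exception " + e );
        System.exit( -1 );
    }
    p.start();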

This code creates a QuickTime-format file called "output.mov" with a raw (linear) audio track and a video track encoded with the Cinepak compressor.

JMF is a highly flexible multimedia architecture that shows a lot of promise. Hopefully, Sun will work on making it more stable, documenting the framework and providing more example code. Its support for Video for Windows (VFW) makes it a good contender for future multimedia applications. In its current state it is usable, but the lack of documentation makes it difficult to create a complex program.

JMF 2.0 Programmer's guide: http://java.sun.com/products/java-media/jmf/2.0/jmf20-08-guide.pdf